Tuesday, 22 January 2013

External Memory

Hello and Assalamualaikum :)

Ahah! Got a new topic to post!

Let's start with external memory.

External Memory

External memory, which is sometimes called backing store or secondary memory, allows the permanent storage of large quantities of data. Some form of magnetic recording on magnetic disks or tapes is most commonly used. More recently, optical methods, which rely upon marks etched by a laser beam on the surface of a disc (CD-ROM), have become popular, although they remain more expensive than magnetic media. The capacity of external memory is high, usually measured in hundreds of megabytes or even gigabytes (thousand million bytes) at present. External memory has the important property that the information stored is not lost when the computer is switched off.

External memory can be divided into three types:

• Magnetic disk
 ◦ RAID
 ◦ Removable
• Optical
 ◦ CD-ROM
 ◦ CD-Recordable (CD-R)
 ◦ CD-R/W
 ◦ DVD
• Magnetic tape



Magnetic Disk

The magnetic disk is the primary computer storage device. Like tape, it is magnetically recorded and can be re-recorded over and over. Disks are rotating platters with a mechanical arm that moves a read/write head between the outer and inner edges of the platter's surface. Finding a location can take as long as one second on a floppy disk, or as little as a couple of milliseconds on a fast hard disk.

Why Magnetic Disk Storage?

• The base technology has a simplicity and elegance through its use of magnetics (a DC motor to spin the disks, a voice coil to position the head, magnetic disks for storage and magnetic heads for reading and writing)
• The technology offers an unequaled set of performance tradeoffs in terms of capacity, access time, data rate and cost/GB
• Magnetic disk storage has dominated on-line real-time storage since its creation and now underpins information storage via the Internet, making it an integral part of today's societies
• The technology is still advancing at a dramatic rate
• Conclusion: magnetic disk storage will be the major means of information storage for many more decades

Read/Write Mechanism

• Recording and retrieval via a conductive coil called a head
• May be a single read/write head or separate ones
• During read/write, the head is stationary while the platter rotates
• Write
 o Current through the coil produces a magnetic field
 o Pulses are sent to the head
 o A magnetic pattern is recorded on the surface below
• Read (traditional)
 o A magnetic field moving relative to the coil produces a current
 o The coil is the same for read and write
• Read (contemporary)
 o Separate read head, close to the write head
 o Partially shielded magnetoresistive (MR) sensor
 o Electrical resistance depends on the direction of the magnetic field
 o High-frequency operation
 o Higher storage density and speed

Data Organization and Formatting

• Data is organized on a platter using a set of concentric rings called tracks
 o Each track is the same width as the head
 o Adjacent tracks are separated by gaps, which minimizes errors due to misalignment or magnetic field interference
• Tracks are divided into sectors
 o Data is transferred to and from the disk in sectors (or blocks of sectors)
 o Adjacent sectors are separated by intratrack gaps

Disk Velocity

• A bit stored near the center of a rotating disk passes a fixed point more slowly than a bit stored near the outside edge of the disk
• Spacing between bits is increased on the tracks towards the outside edge, so every track can be read at the same rotation rate
• Constant angular velocity (CAV): rotating the disk at a fixed rate
 o Sectors are pie shaped and the tracks are concentric
 o Individual tracks and sectors can be directly addressed
 o The head is moved to the desired track and then waits for the desired sector
 o Wastes disk space on the outer tracks, which have lower data density
• Multiple zone recording
 o The surface is divided into concentric zones; within a zone, the number of bits per track is constant
 o Zones towards the outer edge contain more bits and more sectors
 o Read and write timing changes from one zone to the next
 o Increased capacity is traded for more complex circuitry
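To see why zoned recording buys capacity, here is a minimal sketch with completely made-up geometry (1000 tracks between 20 mm and 45 mm, 512-byte sectors, and an assumed maximum of 2 sectors per millimetre of track). Under CAV every track gets the sector count that fits on the innermost track; with multiple zone recording each zone gets the sector count that fits on its own innermost track.

```python
import math

# Hypothetical geometry, invented for illustration only.
INNER_MM, OUTER_MM, TRACKS = 20.0, 45.0, 1000
SECTOR_BYTES = 512
SECTORS_PER_MM = 2.0  # assumed: sectors that fit along 1 mm of track at maximum bit density


def track_radius(t):
    """Radius of track t (0 = innermost), with tracks evenly spaced."""
    return INNER_MM + (OUTER_MM - INNER_MM) * t / (TRACKS - 1)


def sectors_that_fit(radius):
    """Sectors a track of this radius could hold at maximum bit density."""
    return math.floor(2 * math.pi * radius * SECTORS_PER_MM)


def cav_capacity():
    """CAV: every track has the same sector count, limited by the innermost track."""
    return TRACKS * sectors_that_fit(INNER_MM) * SECTOR_BYTES


def zoned_capacity(zones):
    """Zoned: sector count is constant within a zone, set by that zone's innermost track."""
    per_zone = TRACKS // zones
    total = 0
    for z in range(zones):
        first = z * per_zone
        last = TRACKS if z == zones - 1 else first + per_zone
        total += (last - first) * sectors_that_fit(track_radius(first)) * SECTOR_BYTES
    return total


print("CAV bytes:        ", cav_capacity())
print("8-zone bytes:     ", zoned_capacity(8))
```

With a single zone the two schemes are identical; as soon as the outer tracks get their own zones, they can hold more sectors than the innermost track allows, which is the capacity gain (and the extra timing circuitry) mentioned above.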

Physical Characteristics:

Disk Performance Parameters

General timing diagram of disk I/O transfer:

• Seek time
 o Time it takes to position the head at the desired track (moveable-head system)
• Rotational delay
 o Time it takes for the beginning of the desired sector to reach the head
 o Floppy disks rotate at a rate between 300 and 600 rpm
 o Hard disks rotate at a rate between 3600 and 15000 rpm
• Access time
 o Sum of the seek time and the rotational delay
• Transfer time
 o Time required for the data transfer
 o Dependent on the rotation speed of the disk
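Here is a small worked sketch of these parameters. It assumes the usual textbook simplifications: on average the desired sector is half a revolution away, and transfer time is T = b / (rN), where b is the number of bytes to transfer, N the number of bytes on a track and r the rotation speed. The drive figures in the usage line are hypothetical.

```python
def avg_rotational_delay_ms(rpm):
    """On average the desired sector is half a revolution away from the head."""
    ms_per_rev = 60_000 / rpm
    return ms_per_rev / 2


def transfer_time_ms(bytes_to_read, bytes_per_track, rpm):
    """T = b / (r * N): time for b bytes to pass under the head."""
    revs_per_ms = rpm / 60_000
    return bytes_to_read / (revs_per_ms * bytes_per_track)


def access_time_ms(avg_seek_ms, rpm):
    """Access time = seek time + rotational delay."""
    return avg_seek_ms + avg_rotational_delay_ms(rpm)


def total_io_time_ms(avg_seek_ms, rpm, bytes_to_read, bytes_per_track):
    """Access time plus transfer time for one request."""
    return access_time_ms(avg_seek_ms, rpm) + transfer_time_ms(bytes_to_read, bytes_per_track, rpm)


# Hypothetical drive: 4 ms average seek, 15000 rpm, 500 sectors of 512 bytes per track.
print(total_io_time_ms(4.0, 15000, 512, 500 * 512))
# A 300 rpm floppy waits about 100 ms on average just for the sector to come around.
print(avg_rotational_delay_ms(300))
```

For a single sector, the seek and rotational delay dominate; the transfer itself is only a tiny fraction of a revolution.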

Well that's all from me for now. I hope you are having a wonderful day!

Big Squeezes! xo

RAID

So what exactly is RAID?

First of all, the acronym stands for Redundant Array of Inexpensive Disks. It is a system whereby a large number of low-cost hard drives can be linked together to form a single large-capacity storage device that offers superior performance, storage capacity and reliability over older storage solutions. It has been a widely used and deployed storage method in the enterprise and server markets, and over the past 5 years it has become much more common in end-user systems.

Advantages of RAID

There are three primary reasons that RAID was implemented:

- Redundancy

- Increased Performance

- Lower Costs

There are typically three forms of RAID used for desktop computer systems: RAID 0, RAID 1 and RAID 5. In most cases, only the first two of these are available, and one of those two is technically not a form of RAID.

RAID 0

The lowest designated level of RAID, level 0, is actually not a true form of RAID. It was given the designation of level 0 because it fails to provide any level of redundancy for the data stored in the array. Thus, if one of the drives fails, all the data in the array is lost.

RAID 0 uses a method called striping. Striping takes a single chunk of data, like a graphic image, and spreads that data across multiple drives. The advantage of striping is improved performance: with two drives, roughly twice as much data can be written in a given time frame as when the same data is written to a single drive.

Below is an example of how data is written in a RAID 0 implementation. Each row in the chart represents a physical block on the drive and each column represents an individual drive. The numbers in the table represent the data blocks. Because RAID 0 has no redundancy, no data block appears twice.
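The striping pattern from that chart can be sketched in a few lines. This assumes two drives and round-robin placement of consecutive blocks (block 1 on drive 1, block 2 on drive 2, and so on); the function names are just for illustration.

```python
def raid0_layout(num_blocks, num_drives=2):
    """Rows of the chart: row = physical block on each drive, column = drive."""
    return [list(range(start + 1, min(start + num_drives, num_blocks) + 1))
            for start in range(0, num_blocks, num_drives)]


def raid0_locate(block, num_drives=2):
    """Map a 1-based logical block number to (drive, physical block), both 0-based."""
    i = block - 1
    return i % num_drives, i // num_drives


for row in raid0_layout(6):
    print(row)
# [1, 2]
# [3, 4]
# [5, 6]
```

Consecutive blocks land on different drives, so both drives can work at the same time; but every file is spread over both, which is why losing one drive loses everything.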

RAID 1

RAID version 1 was the first real implementation of RAID. It provides a simple form of redundancy for data through a process called mirroring. This form typically requires two individual drives of similar capacity. One drive is the active drive and the secondary drive is the mirror. When data is written to the active drive, the same data is written to the mirror drive.

The following is an example of how the data is written in a RAID 1 implementation. Each row in the chart represents a physical block on the drive and each column represents an individual drive. The numbers in the table represent the data blocks. Duplicate numbers indicate a duplicated data block.
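Mirroring is easy to model: every write goes to both drives, and a read can be served by whichever drive still works. This is a toy sketch (the class and its methods are hypothetical, not a real RAID API), assuming two drives with drive 0 active and drive 1 as the mirror.

```python
class Raid1Mirror:
    """Toy model of a two-drive RAID 1 array: drive 0 is active, drive 1 is the mirror."""

    def __init__(self, num_blocks):
        self.drives = [[None] * num_blocks, [None] * num_blocks]
        self.failed = [False, False]

    def write(self, block, data):
        # Every write goes to both drives (skipping a drive that has failed).
        for d in (0, 1):
            if not self.failed[d]:
                self.drives[d][block] = data

    def read(self, block):
        # Read from the first drive that still works.
        for d in (0, 1):
            if not self.failed[d]:
                return self.drives[d][block]
        raise OSError("both drives in the mirror have failed")


array = Raid1Mirror(4)
array.write(0, "block 1")
array.failed[0] = True      # simulate losing the active drive
print(array.read(0))        # block 1 (served by the mirror)
```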

RAID 0+1

In this case, the data blocks are striped across the drives within each of two sets, while the two sets mirror each other. This gives the increased performance of RAID 0, because each set takes roughly half the time a single drive would to write the data, and it also provides redundancy. The major drawback, of course, is the cost: this implementation requires a minimum of 4 hard drives.

Advantages:

- Increased performance

- Data is fully redundant

Disadvantages:

- Large number of drives required

- Effective data capacity is halved

RAID 1+0

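Just like the RAID 0+1 setup, RAID 1+0 (also called RAID 10) requires a minimum of four hard drives to function. Here the drives are first mirrored in pairs and the pairs are then striped. Performance is pretty much the same, but the data is a bit better protected than in RAID 0+1, because the array survives any set of failures that leaves at least one working drive in every mirrored pair.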

Advantages:

- Increased performance

- Data is fully redundant

Disadvantages:

- Large number of drives required

- Effective data capacity is halved
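Why is RAID 1+0 "a bit more protected"? A quick way to check is to enumerate every possible two-drive failure in a four-drive array. This sketch assumes hypothetical drives A to D, and the simple model where a RAID 0+1 stripe set is lost as soon as any of its drives fails.

```python
from itertools import combinations

DRIVES = ("A", "B", "C", "D")       # hypothetical drive names
GROUPS = ({"A", "B"}, {"C", "D"})   # stripe sets (0+1) or mirror pairs (1+0)


def raid01_survives(failed):
    # RAID 0+1: {A,B} and {C,D} are stripe sets that mirror each other.
    # One failed drive breaks its whole stripe set, so the data survives
    # only if some stripe set has no failed drives.
    return any(not (group & failed) for group in GROUPS)


def raid10_survives(failed):
    # RAID 1+0: {A,B} and {C,D} are mirror pairs striped together.
    # The data survives as long as every mirror pair keeps one working drive.
    return all(group - failed for group in GROUPS)


def survivable(survives, num_failures):
    """(survivable cases, total cases) over every way num_failures drives can fail."""
    cases = [set(c) for c in combinations(DRIVES, num_failures)]
    return sum(survives(c) for c in cases), len(cases)


print("RAID 0+1:", survivable(raid01_survives, 2))  # (2, 6)
print("RAID 1+0:", survivable(raid10_survives, 2))  # (4, 6)
```

Both layouts survive any single failure, but with two failed drives RAID 1+0 survives 4 of the 6 combinations versus 2 of 6 for RAID 0+1.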

RAID 5

This is the most powerful form of RAID that can be found in a desktop computer system. Typically it requires a hardware controller card to manage the array, but some desktop operating systems can create these arrays in software. This method uses a form of striping with parity to maintain data redundancy. A minimum of three drives is required to build a RAID 5 array, and they should be identical drives for the best performance.

Parity is essentially a form of binary math that compares two blocks of data and forms a third data block based upon the first two. The easiest way to explain it is even and odd. If the sum of the two data bits is even, the parity bit is 0; if the sum is odd, the parity bit is 1. So 0+0 and 1+1 both give 0, while 0+1 and 1+0 give 1 (this is the exclusive-OR, or XOR, operation). Based on this form of binary math, if one drive in the array fails, its data can be reconstructed from the remaining data and the parity when the drive is replaced.
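Here is that even/odd rule as code: bytewise XOR produces the parity block, and XORing the surviving block with the parity gives back the lost one. The data blocks are tiny hypothetical examples; a real RAID 5 controller works on much larger blocks, but the math is the same.

```python
from functools import reduce
from operator import xor


def parity(*blocks):
    """XOR equal-length blocks byte by byte: each bit is 0 if the sum is even, 1 if odd."""
    return bytes(reduce(xor, column) for column in zip(*blocks))


def rebuild(surviving_blocks, parity_block):
    """XOR of the surviving data blocks and the parity block gives back the lost block."""
    return parity(*surviving_blocks, parity_block)


d1 = bytes([0b0000_1111])  # hypothetical data block on drive 1
d2 = bytes([0b1010_0101])  # hypothetical data block on drive 2
p = parity(d1, d2)         # stored on drive 3
assert rebuild([d1], p) == d2  # drive 2 lost: recover it from d1 and the parity
print(bin(p[0]))  # 0b10101010
```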

With that information in mind, here is an example of how a RAID 5 array would work. Each row in the chart represents a physical block on the drive and each column represents an individual drive. The numbers in the table represent the data blocks. A "P" indicates the parity block for the two data blocks in that row.
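A chart like that can be generated for a three-drive array. This sketch assumes one simple convention where the parity block moves one drive to the left on each stripe, so no single drive holds all the parity; real controllers use several different rotation layouts.

```python
def raid5_layout(num_stripes, num_drives=3):
    """Chart rows: each stripe holds num_drives - 1 data blocks plus one parity block "P".
    Parity rotates one drive to the left each stripe (one possible convention)."""
    rows, next_block = [], 1
    for stripe in range(num_stripes):
        parity_drive = (num_drives - 1 - stripe) % num_drives
        row = []
        for drive in range(num_drives):
            if drive == parity_drive:
                row.append("P")
            else:
                row.append(str(next_block))
                next_block += 1
        rows.append(row)
    return rows


for row in raid5_layout(3):
    print(" ".join(row))
# 1 2 P
# 3 P 4
# P 5 6
```

Spreading the parity around avoids making one drive a bottleneck, since every write has to update a parity block.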

Ahah! That's all for now about RAID :) See ya!

Internal & Cache Memory

Hello, how are you doing? I just wanna share with all of you about memory. This time we will look further into internal and cache memory.

What is internal memory??

Primary storage (or main memory or internal memory), often referred to simply as memory, is the only storage directly accessible to the CPU. The CPU continuously reads instructions stored there and executes them as required. Any data actively operated on is also stored there in a uniform manner.

What is cache memory??

Cache is generally divided into several levels, such as L1 cache, L2 cache and L3 cache. Cache built into the CPU itself is referred to as Level 1 (L1) cache. Cache in a separate chip next to the CPU is called Level 2 (L2) cache. Some CPUs have both L1 and L2 cache built in and use a separate chip as Level 3 (L3) cache. Cache built into the CPU is faster than separate cache. However, a separate cache is still about twice as fast as Random Access Memory (RAM). Cache is more expensive than RAM, but a motherboard with built-in cache is well worth it for maximizing system performance.

The advantage of cache:

Cache serves as temporary storage for data or instructions needed by the processor. In addition, cache speeds up data access on the computer, because it buffers data and information that has already been accessed, easing the processor's work.

Another benefit of cache memory is that the CPU does not have to use the motherboard's system bus for data transfer. Each time data must pass through the system bus, the transfer rate is limited by the motherboard's capability. The CPU can process data much faster by avoiding the bottleneck created by the system bus.

Volatile and Non-volatile Memory



In computing, memory refers to the physical devices used to store programs (sequences of instructions) or data (e.g. program state information) on a temporary or permanent basis for use in a computer or other digital electronic device. The term primary memory is used for physical systems that function at high speed (RAM), as distinct from secondary memory, which consists of physical devices for program and data storage that are slow to access but offer higher capacity. Primary memory stored on secondary memory is called "virtual memory". Memory is a solid-state digital device that provides storage for data values. It is built from memory cells, each of which exhibits two stable states, 1 and 0.

Memory Hierarchy

Typical Memory Reference Patterns

• Memory trace
 ◦ A temporal sequence of memory references (addresses) from a real program
• Temporal locality
 ◦ If an item is referenced, it will tend to be referenced again soon
• Spatial locality
 ◦ If an item is referenced, nearby items will tend to be referenced soon
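To make both kinds of locality concrete, here is a sketch that builds a hypothetical memory trace for a loop like `for i in range(n): total += a[i]` (the addresses and 4-byte elements are invented) and counts how many references touch a memory block for the first time. An ideal cache of unlimited size misses only on those first touches; every other reference is a reuse.

```python
def array_sum_trace(n, array_base=0x200, sum_addr=0x100, elem_size=4):
    """Hypothetical trace: each iteration loads a[i], loads total, stores total."""
    trace = []
    for i in range(n):
        trace.append(array_base + i * elem_size)  # a[i]: neighbours follow (spatial locality)
        trace.append(sum_addr)                    # load total: same address again (temporal)
        trace.append(sum_addr)                    # store total
    return trace


def first_touch_misses(trace, block_size=16):
    """Count references that touch a block for the first time."""
    seen, misses = set(), 0
    for addr in trace:
        block = addr // block_size
        if block not in seen:
            seen.add(block)
            misses += 1
    return misses


trace = array_sum_trace(8)
print(len(trace), "references")
print(first_touch_misses(trace), "first touches with 16-byte blocks")
print(first_touch_misses(trace, block_size=4), "first touches with 4-byte blocks")
```

With 16-byte blocks, 24 references touch only 3 blocks: temporal locality covers the repeated `total` accesses, and spatial locality lets four array elements share each block. Shrinking the block to one element removes the spatial benefit, and the count rises to 9.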

How Does a Memory Cell Function?

• Select terminal: selects a memory cell for a read or write operation
• Control terminal: indicates whether the operation is a read or a write

There are two memory types: volatile and non-volatile memory. Volatile memory is memory that loses its contents when the computer or hardware device loses power. Computer RAM is a good example of a volatile memory. Non-volatile memory, sometimes abbreviated as NVRAM, is memory that keeps its contents even if the power is lost. CMOS is a good example of a non-volatile memory.

Types of Internal Memory:

ROM (Read-Only Memory)

PROM (Programmable Read-Only Memory):

Whereas the contents of a ROM are determined by the vendor, a PROM is sold empty and can then be filled with a program by the user. Once it has been programmed, the contents of a PROM cannot be erased.

EPROM (Erasable Programmable Read-Only Memory):

Unlike a PROM, an EPROM's contents can be erased after being programmed. Erasure is done using ultraviolet light.

EEPROM (Electrically Erasable Programmable Read-Only Memory):

EEPROM can store data permanently, but its contents can still be erased electrically under program control. One type of EEPROM is flash memory, which is commonly used in digital cameras, video game consoles and BIOS chips.

RAM (Random Access Memory)

RAM is divided into two types, static and dynamic:

Static RAM stores a bit of information in a flip-flop. Static RAM is usually used for applications that do not require large-capacity RAM.

Dynamic RAM stores one bit of information as an electric charge. It uses the gate-substrate capacitance of MOS transistors as memory cells. To keep the stored data intact, dynamic RAM must be refreshed periodically by reading the data and writing it back into memory. Dynamic RAM is used for applications that require large RAM capacity, for example in a personal computer (PC).

SRAM (Static Random Access Memory)

SRAM is a type of RAM (a type of semiconductor memory) that does not use a capacitor. As a result, SRAM does not need the periodic refresh that DRAM requires, which in turn makes it faster than DRAM. By function, SRAM is divided into asynchronous and synchronous types.

EDO RAM (Extended Data Out Random Access Memory)

EDO RAM is a type of RAM that can begin the next memory access while the data from the previous access is still being output, so reading becomes faster. It was generally used in PCs in place of the earlier Fast Page Mode (FPM) RAM. Like FPM DRAM, EDO RAM has a maximum speed of 50 MHz. EDO RAM also needs a significant L2 cache to keep everything running quickly; if the system does not have one, EDO RAM will run much slower.

SDRAM (Synchronous Dynamic Random Access Memory)

SDRAM is not an extension of the older EDO RAM line but a new type of DRAM. SDRAM started out running at a 66 MHz transfer rate, while fast page mode DRAM and EDO RAM run at a maximum of 50 MHz. To further accelerate processor performance, newer generations of RAM such as DDR and RDRAM are usually able to provide better performance.

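DDR (Double Data Rate SDRAM)

Double data rate synchronous dynamic RAM is just like SDRAM except that it transfers data on both edges of the clock, giving it higher bandwidth (greater speed). Its peak transfer rate is approximately 2128 MBps for DDR SDRAM with a 133 MHz clock and a 64-bit bus, twice the roughly 1064 MBps of single data rate SDRAM at the same clock.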
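The peak numbers come from simple arithmetic: clock rate × transfers per clock × bytes per transfer. This sketch assumes a 64-bit (8-byte) memory bus, the usual DIMM width, and uses 133 MHz as a round figure (the real clock is about 133.33 MHz). One transfer per clock gives 1064 MB/s; DDR's two transfers per clock give twice that.

```python
def peak_bandwidth_mb_s(clock_mhz, transfers_per_clock, bus_width_bits=64):
    """Peak MB/s = clock (MHz) x transfers per clock x bytes per transfer."""
    return clock_mhz * transfers_per_clock * bus_width_bits / 8


print(peak_bandwidth_mb_s(133, 1))  # 1064.0  (one transfer per clock, as in SDRAM)
print(peak_bandwidth_mb_s(133, 2))  # 2128.0  (DDR: both clock edges)
```

These are theoretical peaks; real sustained throughput is lower because of refresh, row switching and bus turnaround.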

Direct Mapped Cache

If the cache contains 2^k one-byte blocks, then the k least significant bits (LSBs) of the address are used as the index: data from address i is stored in block i mod 2^k.

For example, in a four-block cache (k = 2), data from memory address 11 maps to cache block 3 on the right, since 11 mod 4 = 3 and since the lowest two bits of 1011 (11 in binary) are 11.
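The same index calculation in code: masking off the low k bits gives the cache block (identical to address mod 2^k), and the remaining high bits form the tag that the cache stores to remember which address currently occupies the block. This assumes one-byte blocks, as in the example, so there are no offset bits.

```python
def direct_mapped_slot(address, index_bits):
    """Split an address for a direct-mapped cache with one-byte blocks.
    index = low index_bits bits (address mod 2**index_bits); tag = the remaining high bits."""
    index = address & ((1 << index_bits) - 1)
    tag = address >> index_bits
    return tag, index


print(direct_mapped_slot(11, 2))  # (2, 3): 1011 -> tag 10, index 11
```

Addresses 3, 7, 11 and 15 all share index 3 in a four-block cache, and only their tags tell them apart.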

How big is the cache?

For a byte-addressable machine with 16-bit addresses and a cache with the following characteristics:

- It is direct-mapped (as discussed last time)
- Each block holds one byte
- The cache index is the four least significant bits

How many blocks does the cache hold?

4-bit index -> 2^4 = 16 blocks

How many bits of storage are required to build the cache (e.g., for the data array, tags, etc.)?

tag size = 16-bit address - 4-bit index = 12 bits
bits per block = 12 tag bits + 1 valid bit + 8 data bits = 21 bits
total = 21 bits x 16 blocks = 336 bits
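The same calculation as a small function, so you can try other address widths or index sizes. It assumes the setup above: direct-mapped, one byte per block (so no offset bits) and one valid bit per block.

```python
def direct_mapped_storage_bits(address_bits, index_bits, data_bits_per_block=8, valid_bits=1):
    """Total bits = blocks x (tag + valid + data) for a direct-mapped cache with one-byte blocks."""
    blocks = 2 ** index_bits
    tag_bits = address_bits - index_bits  # no offset bits because each block holds one byte
    return blocks * (tag_bits + valid_bits + data_bits_per_block)


print(direct_mapped_storage_bits(16, 4))  # 336
```

Only 128 of those 336 bits (16 blocks × 8 bits) hold actual data; the rest is bookkeeping, which is why real caches use larger blocks.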

So short, and yet so meaningful, right? That's all for memory. Hopefully this topic helps all of you know more about memory. Till we meet again... Have a great day, lovelies!


Parallel Processing

How are y'all doing? Sorry.. I was so into typing that I forgot to introduce what our topic is about. For your information, this time we will learn more about parallel processing. I'm sure many of you still do not understand what parallel processing is. Okay, today I'm very happy to talk about parallel processing.. Let's get started...

Do You Know That??

Let's start with what parallel processing is.

The basic idea of parallelism can be seen in data communication. Asynchronous communication may be further classified as serial or parallel, depending upon the number of bits being transferred at the same instant. In serial communication, only one bit of information is transferred at a time, while in parallel communication multiple bits, generally 8, 12 or 16, are transferred at the same time. Therefore, fewer transmission lines are needed to connect two communicating devices with serial communication than with parallel communication. However, data transfer is faster with parallel communication than with serial communication.
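A quick back-of-the-envelope sketch of that tradeoff: count the bit-times needed to move some data when 1, 8 or 16 lines carry bits side by side. It assumes every line runs at the same bit rate and ignores start/stop framing bits and signal skew, so it only shows the idealized difference.

```python
import math


def transfer_steps(num_bytes, lines):
    """Bit-times to move num_bytes when `lines` bits travel side by side (lines=1 is serial)."""
    return math.ceil(num_bytes * 8 / lines)


for lines in (1, 8, 16):
    print(lines, "line(s):", transfer_steps(100, lines), "bit-times")
```

More lines means fewer steps, but each extra line is another wire (or trace) that has to be routed and kept in sync.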

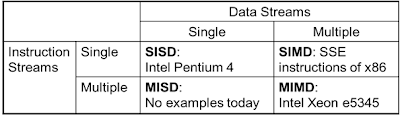

Single Instruction, Single Data Stream (SISD)

• Uniprocessor: a single processor
• Single instruction stream
• Data stored in a single memory

Single Instruction, Multiple Data Stream (SIMD)

• A single machine instruction controls the simultaneous execution of a number of processing elements on a lockstep basis
• Each instruction is executed on a different set of data by different processors
• Each processing element has an associated data memory
• Application: vector and array processing
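Here is a conceptual sketch of the SIMD idea: a control unit broadcasts one instruction, and every processing element applies it to the data in its own local memory. The processing elements and their data are hypothetical, and Python runs the loop sequentially, so this only models the lockstep behaviour that real SIMD hardware performs in parallel.

```python
def simd_step(instruction, local_memories):
    """Broadcast one instruction; each processing element applies it to its own data."""
    # Real SIMD hardware does this in lockstep; this loop only models the idea.
    return [instruction(data) for data in local_memories]


def add_operands(pair):
    """The single 'machine instruction' every processing element executes this step."""
    a, b = pair
    return a + b


# Hypothetical: four processing elements, each holding its own pair of operands.
local_memories = [(1, 10), (2, 20), (3, 30), (4, 40)]
print(simd_step(add_operands, local_memories))  # [11, 22, 33, 44]
```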

Multiple Instruction, Single Data Stream (MISD)

· A sequence of data
· Is transmitted to a set of processors
· Each processor executes a different instruction sequence

:: Not clear if it has ever been implemented :)

Multiple Instruction, Multiple Data Stream (MIMD)

- Set of processors
- Simultaneously executes different instruction sequences
- Different sets of data

Examples: SMPs, NUMA systems and clusters

The advantages of a tightly coupled multiprocessor, as shown in its block diagram, are that the processors share memory and can communicate via that shared memory.
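Communicating through shared memory can be sketched with threads standing in for processors: every worker updates the same memory location, and a lock ensures only one update happens at a time. The `SharedCounter` class is hypothetical, and CPython threads interleave rather than truly running in parallel, so treat this as a model of the idea rather than of real multiprocessor hardware.

```python
import threading


class SharedCounter:
    """Hypothetical shared-memory location that several workers update."""

    def __init__(self):
        self._lock = threading.Lock()  # guards the shared value
        self.value = 0

    def add(self, amount):
        with self._lock:  # only one worker updates the shared memory at a time
            self.value += amount


def run_workers(num_workers=4, increments=1000):
    counter = SharedCounter()  # the memory all workers share

    def worker():
        for _ in range(increments):
            counter.add(1)

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every worker before reading the result
    return counter.value


print(run_workers())  # 4000
```

Without the lock, two workers could read the same old value and both write back value + 1, losing an update; coordinating access like this is part of the cost of sharing memory.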

Next we move on to Symmetric Multiprocessor Organization. What is Symmetric Multiprocessor Organization? For those who don't know what it really is: it is a multiprocessor computer hardware architecture where two or more identical processors are connected to a single shared main memory and are controlled by a single OS instance. Most common multiprocessor systems today use an SMP architecture. In the case of multi-core processors, the SMP architecture applies to the cores, treating them as separate processors.

Symmetric Multiprocessor Organization (SMP) systems are tightly coupled multiprocessor systems. The processors run independently, each executing different programs and working on different data, with the capability of sharing common resources such as memory, I/O devices and the interrupt system, and they are connected using a system bus or a crossbar.

The advantages of SMP:

- Performance: if some work can be done in parallel
- Availability: since all processors can perform the same functions, failure of a single processor does not halt the system
- Incremental growth: users can enhance performance by adding additional processors
- Scaling: suppliers can offer a range of products based on the number of processors
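The "if some work can be done in parallel" caveat is commonly quantified with Amdahl's law, which the post does not name but which fits here: if a fraction f of a program can run in parallel on N processors, the speedup is 1 / ((1 - f) + f / N). This sketch uses a hypothetical 90% parallel workload.

```python
def amdahl_speedup(parallel_fraction, processors):
    """Speedup = 1 / ((1 - f) + f / N) for parallel fraction f on N processors."""
    serial = 1 - parallel_fraction
    return 1 / (serial + parallel_fraction / processors)


# Hypothetical workload: 90% of the work can be spread across processors.
for n in (2, 4, 8, 16):
    print(n, "processors:", round(amdahl_speedup(0.9, n), 2))
```

Even with 16 processors the speedup stays below 10, because the 10% serial part never gets faster. Adding processors (incremental growth) helps most when the parallel fraction is high.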

Multiprocessor Architectures:

Distributed Memory :)

Do you know what the benefits of clusters are??? They are absolute scalability, incremental scalability, high availability and, last but not least, superior price/performance.

Here is the cluster configuration:

Comparing clusters with SMP:

Symmetric Multiprocessor Organization (SMP):
• Easier to manage and control
• Closer to single processor systems (scheduling is the main difference)
• Less physical space
• Lower power consumption

Clusters:
• Superior incremental & absolute scalability
• Less cost & superior availability through redundancy

Similarities between clusters and SMP:
• Both provide multiprocessor support to high-demand applications
• Both are available commercially

Non-uniform Memory Access (NUMA) (tightly coupled)

• Alternative to SMP & clusters
• Non-uniform memory access:
 ◦ All processors have access to all parts of memory, using load & store
 ◦ A processor's access time differs depending on the region of memory
 ◦ Different processors access different regions of memory at different speeds
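The effect of non-uniform access times on a program can be estimated with a weighted average: how often a processor uses its own node's memory versus another node's. The 100 ns and 300 ns latencies below are invented for illustration, not measurements of any real system.

```python
def numa_average_ns(local_fraction, local_ns, remote_ns):
    """Average access time = local share x local latency + remote share x remote latency."""
    return local_fraction * local_ns + (1 - local_fraction) * remote_ns


# Hypothetical latencies: 100 ns for local memory, 300 ns for another node's memory.
for local in (1.0, 0.8, 0.5):
    print(f"{local:.0%} local:", round(numa_average_ns(local, 100, 300), 1), "ns")
```

This is why NUMA-aware operating systems try to place a process's data in the memory closest to the processor running it.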

Cache coherent NUMA?

• Cache coherence is maintained among the caches of the various processors
• Significantly different from SMP and clusters

Parallel Programming

• Parallel software is the problem
• Need to get significant performance improvement
 ◦ Otherwise, just use a faster uniprocessor, since it's easier!

Difficulties:

• Partitioning
• Coordination
• Communications overhead
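Partitioning and coordination can be seen even in a job as simple as summing a list. This sketch (function names are hypothetical) splits the work into near-equal chunks, lets each "worker" sum its own chunk, then combines the partial results. It runs sequentially, so it only shows the structure a parallel version would need, not a real speedup.

```python
def partition(n_items, n_workers):
    """Split item indices into contiguous chunks whose sizes differ by at most one."""
    base, extra = divmod(n_items, n_workers)
    chunks, start = [], 0
    for w in range(n_workers):
        size = base + (1 if w < extra else 0)
        chunks.append(range(start, start + size))
        start += size
    return chunks


def partitioned_sum(data, n_workers):
    # Partitioning: each (hypothetical) worker sums only its own chunk.
    partials = [sum(data[i] for i in chunk) for chunk in partition(len(data), n_workers)]
    # Coordination/communication: the partial results are gathered and combined.
    return sum(partials)


print([list(c) for c in partition(10, 3)])  # [[0, 1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(partitioned_sum(list(range(10)), 3))  # 45
```

Balancing the chunk sizes keeps any one worker from finishing long after the others, and the final combine step is where communication overhead shows up.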

Definitions of Threads and Processes

• Threads in multithreaded processors may or may not be the same as software threads
• Process:
 ◦ An instance of a program running on a computer
• Thread:
 ◦ A dispatchable unit of work within a process
 ◦ Includes processor context (which includes the program counter and stack pointer) and a data area for the stack
 ◦ Executes sequentially
 ◦ Interruptible: the processor can turn to another thread
• Thread switch:
 ◦ Switching the processor between threads within the same process
 ◦ Typically less costly than a process switch

Parallel, Simultaneous Execution of Multiple Threads

• Simultaneous multithreading
 ◦ Issues multiple instructions at a time
 ◦ One thread may fill all horizontal slots
 ◦ Instructions from two or more threads may be issued
 ◦ With enough threads, the maximum number of instructions can be issued on each cycle
• Chip multiprocessor
 ◦ Multiple processors
 ◦ Each is a two-issue superscalar processor
 ◦ Each processor is assigned a thread
 ◦ Can issue up to two instructions per cycle per thread
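Here is a toy comparison of the two designs, both with four issue slots per cycle: a 4-wide SMT core whose slots any thread can fill, versus two 2-issue cores with one thread each. The per-cycle counts of ready instructions are invented, and the model ignores instructions left over from one cycle to the next, so it only illustrates how shared slots get filled more fully.

```python
def smt_issued(ready, width=4):
    """SMT: one wide issue stage shared by all threads; each cycle, fill slots from any thread."""
    return sum(min(width, sum(per_cycle)) for per_cycle in zip(*ready))


def cmp_issued(ready, width_per_core=2):
    """Chip multiprocessor: each thread has its own core and can only use that core's slots."""
    return sum(min(width_per_core, n) for thread in ready for n in thread)


# Hypothetical ready-instruction counts per cycle for two threads.
ready = [[3, 1, 4, 0],   # thread A
         [1, 2, 0, 3]]   # thread B
print(smt_issued(ready), cmp_issued(ready))  # 14 10
```

When thread A has 4 instructions ready and thread B has none, SMT lets A use all four slots, while on the chip multiprocessor B's idle core wastes its two slots.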

Interconnection Networks

• Network topologies
 ◦ Arrangements of processors, switches and links

NETWORK CHARACTERISTICS

• Performance
 ◦ Latency per message (unloaded network)
 ◦ Throughput
  – Link bandwidth
  – Total network bandwidth
  – Bisection bandwidth
 ◦ Congestion delays (depending on traffic)
• Cost
• Power
• Routability in silicon
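Two of the throughput measures can be computed directly from a topology's list of links. This sketch assumes every link has the same bandwidth; total network bandwidth sums all links, and bisection bandwidth is the worst case, over every way of cutting the nodes into two equal halves, of the bandwidth crossing the cut. It brute-forces all cuts, so it is only meant for small networks like these 8-node examples.

```python
from itertools import combinations


def ring(p):
    """Links of a ring of p nodes: node i connects to node (i + 1) mod p."""
    return [(i, (i + 1) % p) for i in range(p)]


def fully_connected(p):
    """Links of a fully connected network: one link per pair of nodes."""
    return list(combinations(range(p), 2))


def total_bandwidth(links, link_bw):
    """Total network bandwidth: sum of all link bandwidths."""
    return len(links) * link_bw


def bisection_bandwidth(p, links, link_bw):
    """Worst case, over all splits into two equal halves, of the bandwidth crossing the cut."""
    worst = None
    for half in combinations(range(p), p // 2):
        side = set(half)
        crossing = sum(1 for a, b in links if (a in side) != (b in side))
        worst = crossing if worst is None else min(worst, crossing)
    return worst * link_bw


for name, links in [("ring", ring(8)), ("fully connected", fully_connected(8))]:
    print(name, total_bandwidth(links, 1), bisection_bandwidth(8, links, 1))
# ring 8 2
# fully connected 28 16
```

The fully connected network has far better bandwidth, but needs 28 links instead of 8, which is exactly the cost, power and routability tradeoff listed above.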

Multicore Processor

Multi-core processors, which combine two or more independent cores into a single package, have become increasingly popular in the personal computer market (primarily from Intel and AMD) and the game console market (e.g. the eight-core Cell processor in the Sony PS3 and the three-core Xenon processor in the Xbox 360). However, despite the significant potential offered by these processing structures, parallel systems often pose many unique development challenges that simply did not exist when developing products for single-processor platforms. In fact, the performance gained by using multi-core processors frequently depends heavily not only on how the software has been expressed in parallel form, but also on several restrictions imposed by the actual hardware architecture (e.g. cache coherency, system interconnect and so on).

That's all for now.. I hope you guys understand the topic of parallel processing better this time. Till we meet again in the next entry with more exciting topics... I will share more interesting topics in my future posts! Toodles!

NUR IYLIA ZUBIR

B031210032
